Brier, Glenn W. 1950. “Verification of forecasts expressed in terms of probabilities.” Monthly Weather Review 78 (1): 1–3.

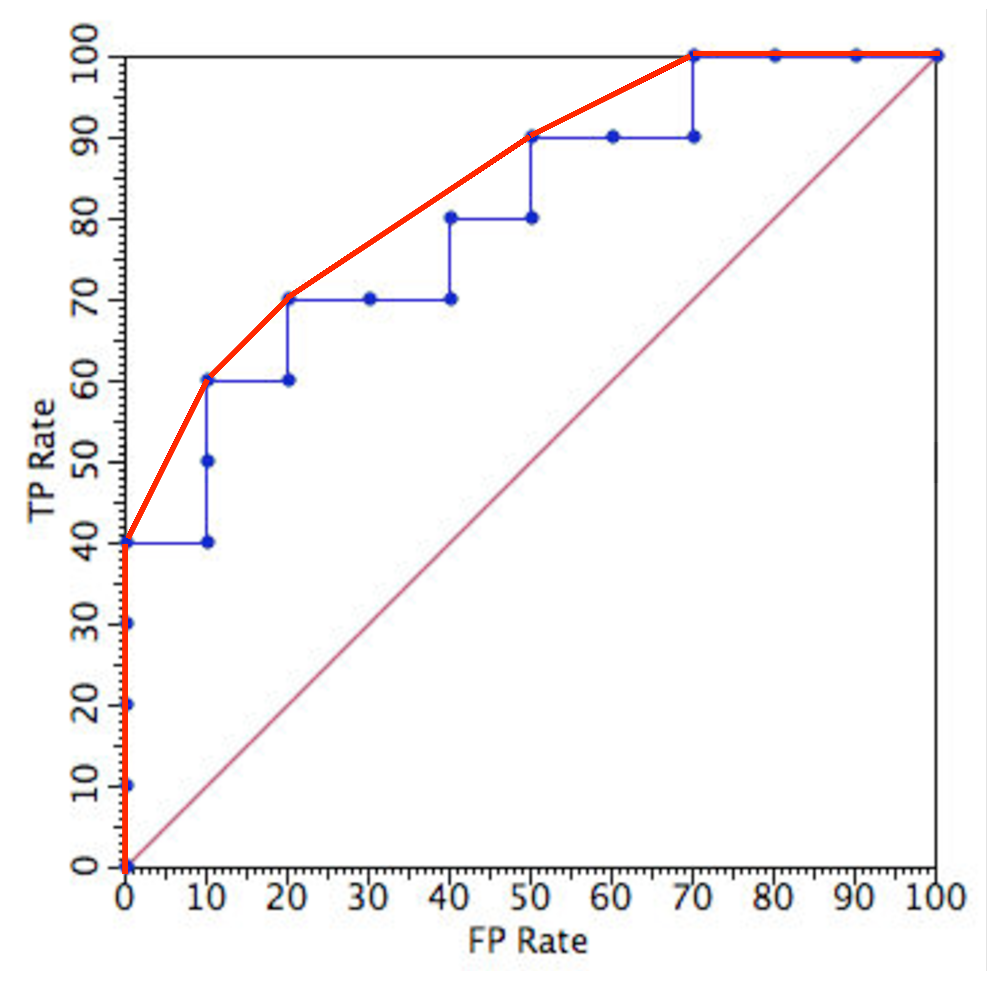

Fawcett, Tom, and Alexandru Niculescu-Mizil. 2007. “PAV and the ROC convex hull.” Machine Learning 68 (1): 97–106.

Flach, Peter A. 2016. “ROC Analysis.” In Encyclopedia of Machine Learning and Data Mining. Springer.

Guo, Chuan, Geoff Pleiss, Yu Sun, and Kilian Q Weinberger. 2017. “On Calibration of Modern Neural Networks.” In 34th International Conference on Machine Learning, 1321–30.

Kull, Meelis, Miquel Perello-Nieto, Markus Kängsepp, Telmo Silva Filho, Hao Song, and Peter Flach. 2019. “Beyond temperature scaling: Obtaining well-calibrated multiclass probabilities with Dirichlet calibration.” In Advances in Neural Information Processing Systems (NIPS’19), 12316–26.

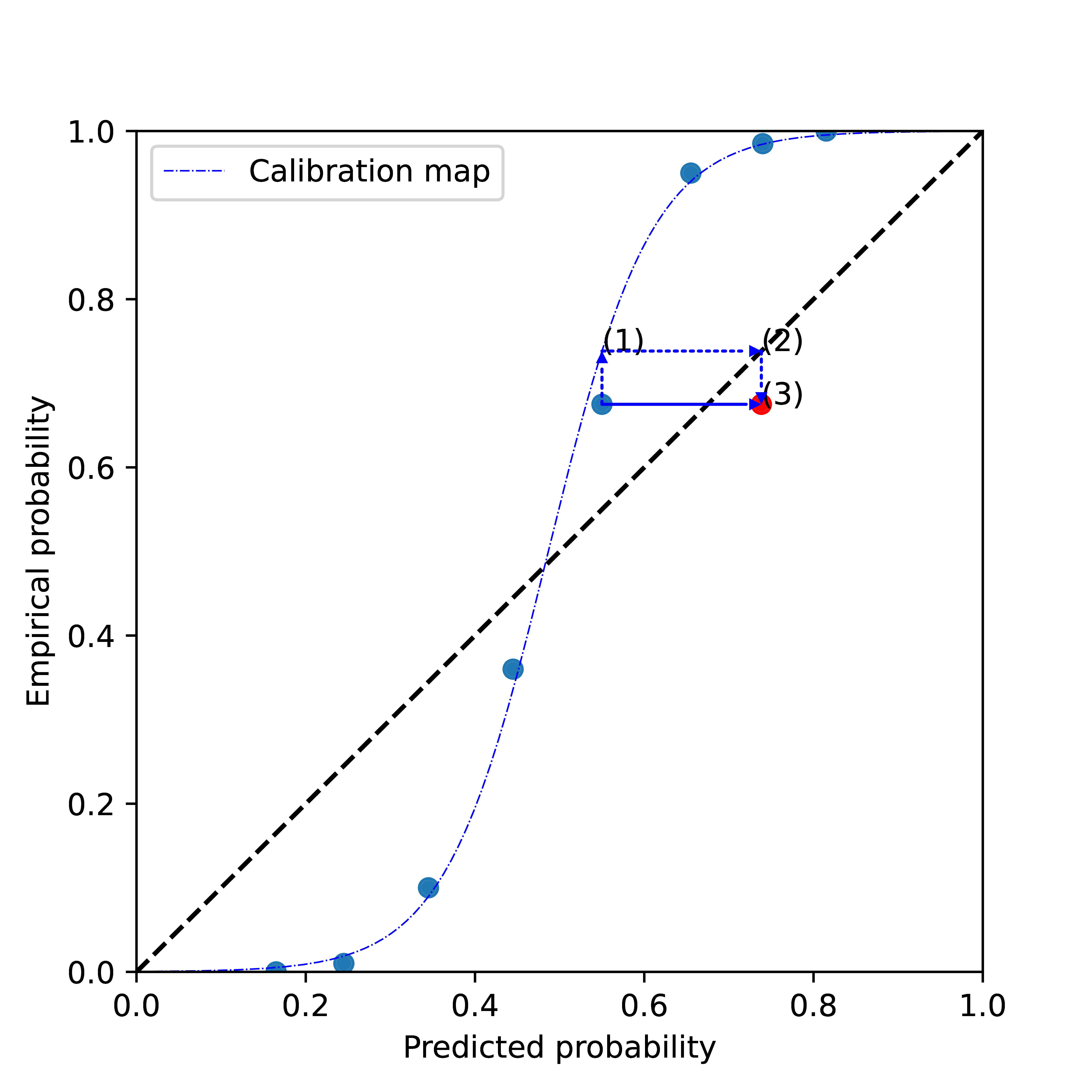

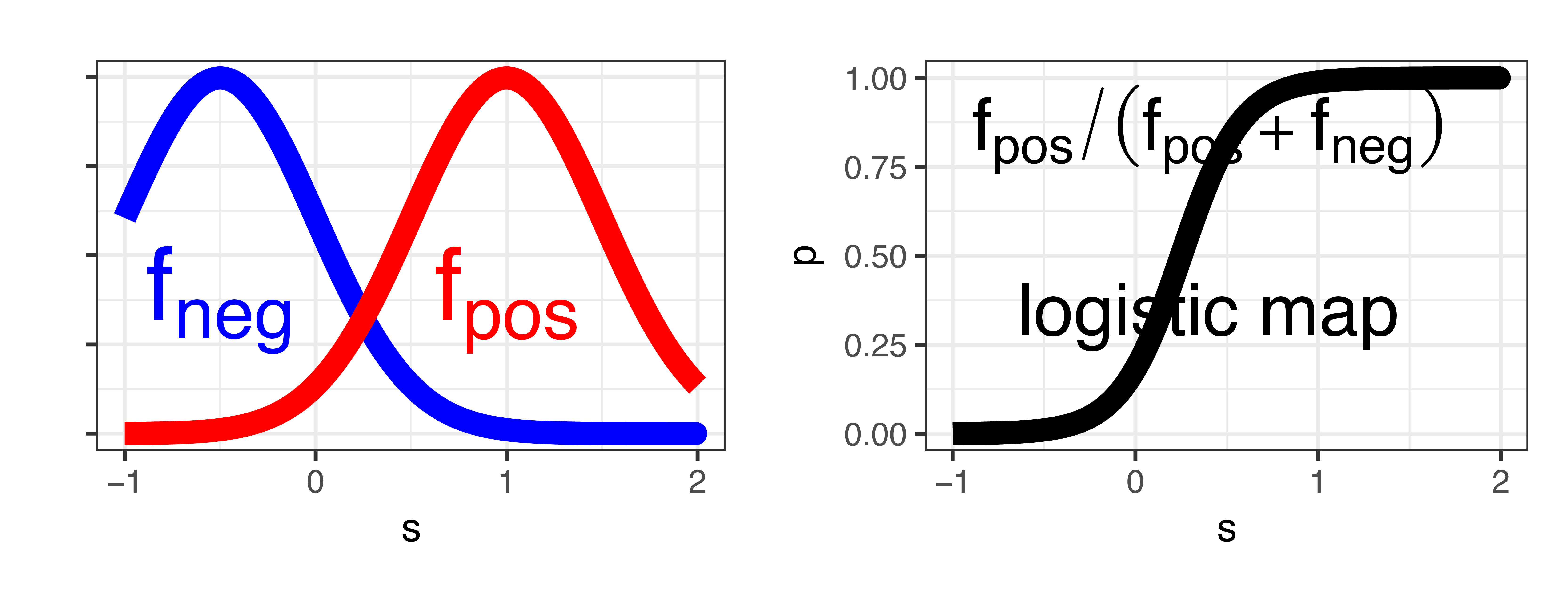

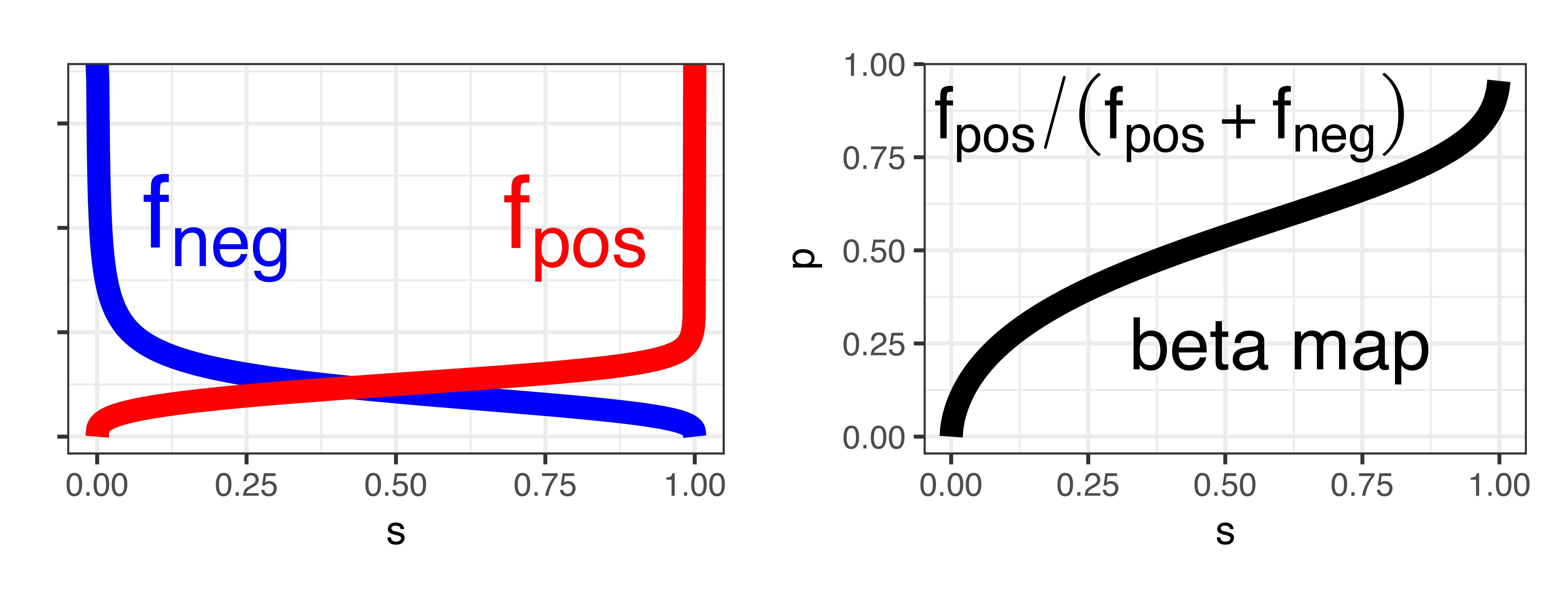

Kull, Meelis, Telmo M. Silva Filho, and Peter Flach. 2017. “Beyond Sigmoids: How to obtain well-calibrated probabilities from binary classifiers with beta calibration.” Electronic Journal of Statistics 11 (2): 5052–80.

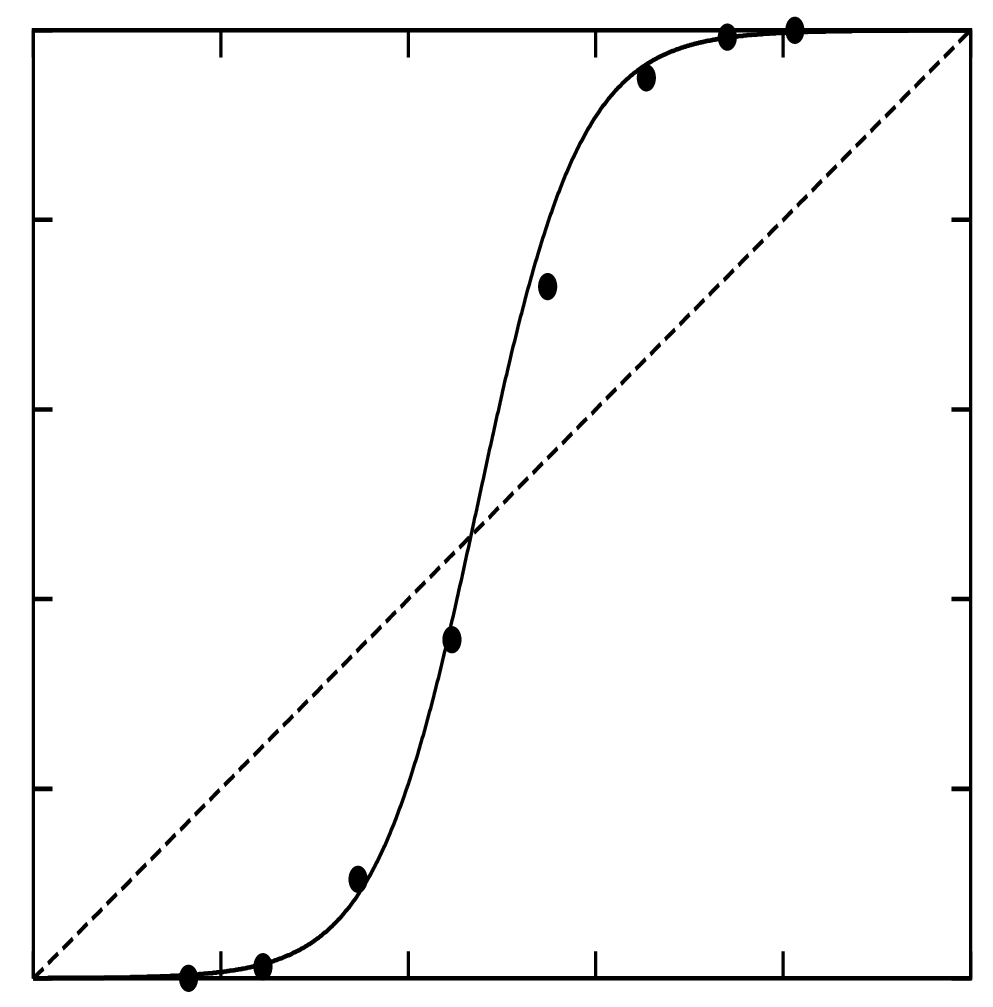

Niculescu-Mizil, Alexandru, and Rich Caruana. 2005. “Predicting good probabilities with supervised learning.” In 22nd International Conference on Machine Learning (ICML’05), 625–32. ACM Press.

Platt, John C. 2000. “Probabilities for SV Machines.” In Advances in Large-Margin Classifiers, edited by Alexander J. Smola, Peter Bartlett, Bernhard Schölkopf, and Dale Schuurmans, 61–74. MIT Press.

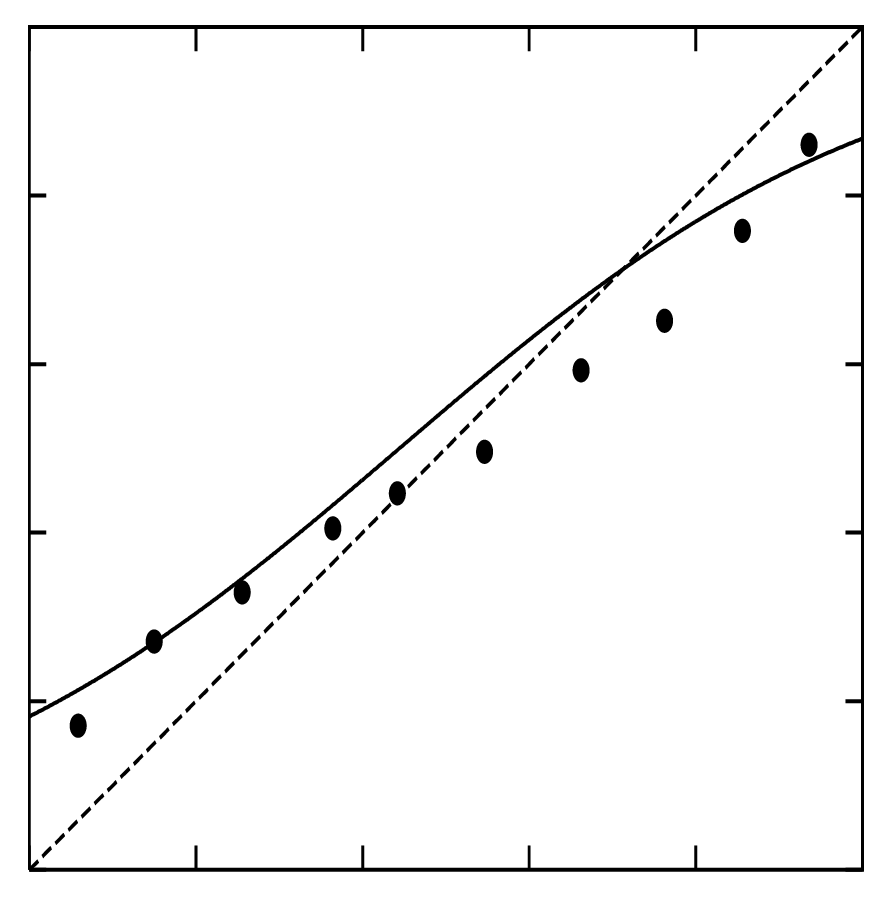

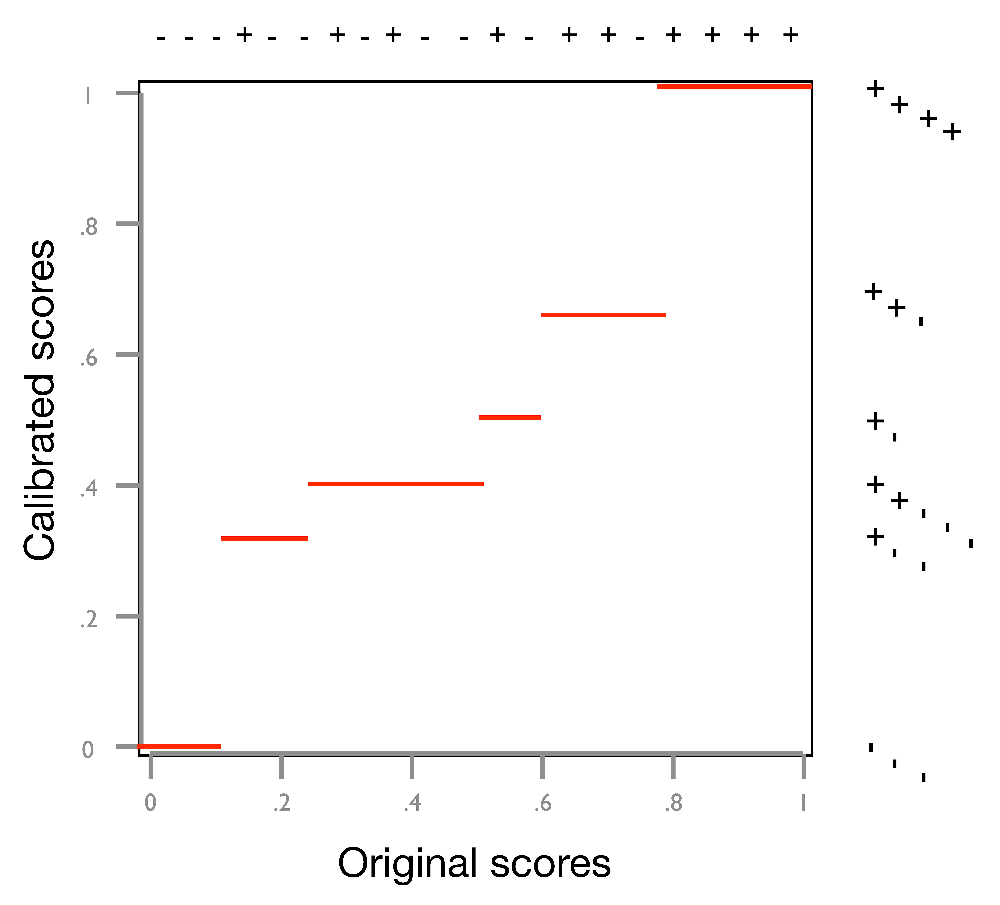

Zadrozny, Bianca, and Charles Elkan. 2001. “Obtaining calibrated probability estimates from decision trees and naive Bayesian classifiers.” In 18th International Conference on Machine Learning (ICML’01), 609–616.

———. 2002. “Transforming Classifier Scores into Accurate Multiclass Probability Estimates.” In 8th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining - KDD ’02, 694–699. ACM Press.
